Article extracted from Civiltà delle Macchine magazine, no. 4, 2021, an issue devoted to “The Digital Twin”

We hear the expression “digital twin” more and more often, in both technical discussions and everyday jargon, in connection with the digitalisation of industrial processes. But industry is not the only field in which we encounter the term: it also arises in the life sciences, as when we speak of digital twins of human bodies, and in the study of climate change, raising the prospect of a digital twin of the whole Earth. So what exactly is a digital twin? The term first arose in engineering, where it refers to a computer program that, fed with data collected from a real system, represents the overall state of its real-world twin concisely but accurately (often through visualisation with 3D models, graphics, curves and dashboards). To simplify, we might say that a digital twin is a software command centre for the real twin, one that can continue to operate even without the system it controls.

The digital twin concept has recently taken on a new meaning: that of a holistic digital model of a real system. This virtual representation (once again, a computer program) can reproduce the state of the system and its changes through the combined use of data, simulations and artificial intelligence. The holistic model that has grown out of the digital twin is an extraordinarily powerful tool because, as we will see in this article, it allows us to make predictions; it is made possible largely by the computing and data-analysis power available to us today, in supercomputers or in the cloud. That power is now great enough to run very complex yet accurate numerical models, which increasingly meet the need to predict how a system will behave under various operating conditions, whether it is a car, a plane, a ship, an industrial plant or even, as we have seen, a human body or our entire planet (though in these last cases we are still in the research phase).

The need for virtual twins in industry has grown with the progressive automation of processes, in which everything is monitored by remote sensing systems and any action that changes the configuration must take place without human intervention. An accurate virtual twin is also essential for predicting the effects of a change of state, whether intentional or unintentional (due, for instance, to altered environmental conditions), in order to prevent malfunctions, cut production costs, train workers, and so on.

The most important element of a digital twin is the software, which processes the data and implements functions whose values replicate the properties of every part of the real system (position, temperature, pressure, voltage). The software is not usually a single program but a multicomponent model (the engine, the structure, air, water) spanning several scales (the metal, the metallic components, the complete airplane, a fleet of airplanes), whose components are evaluated together and coupled through their values, so that they influence one another.

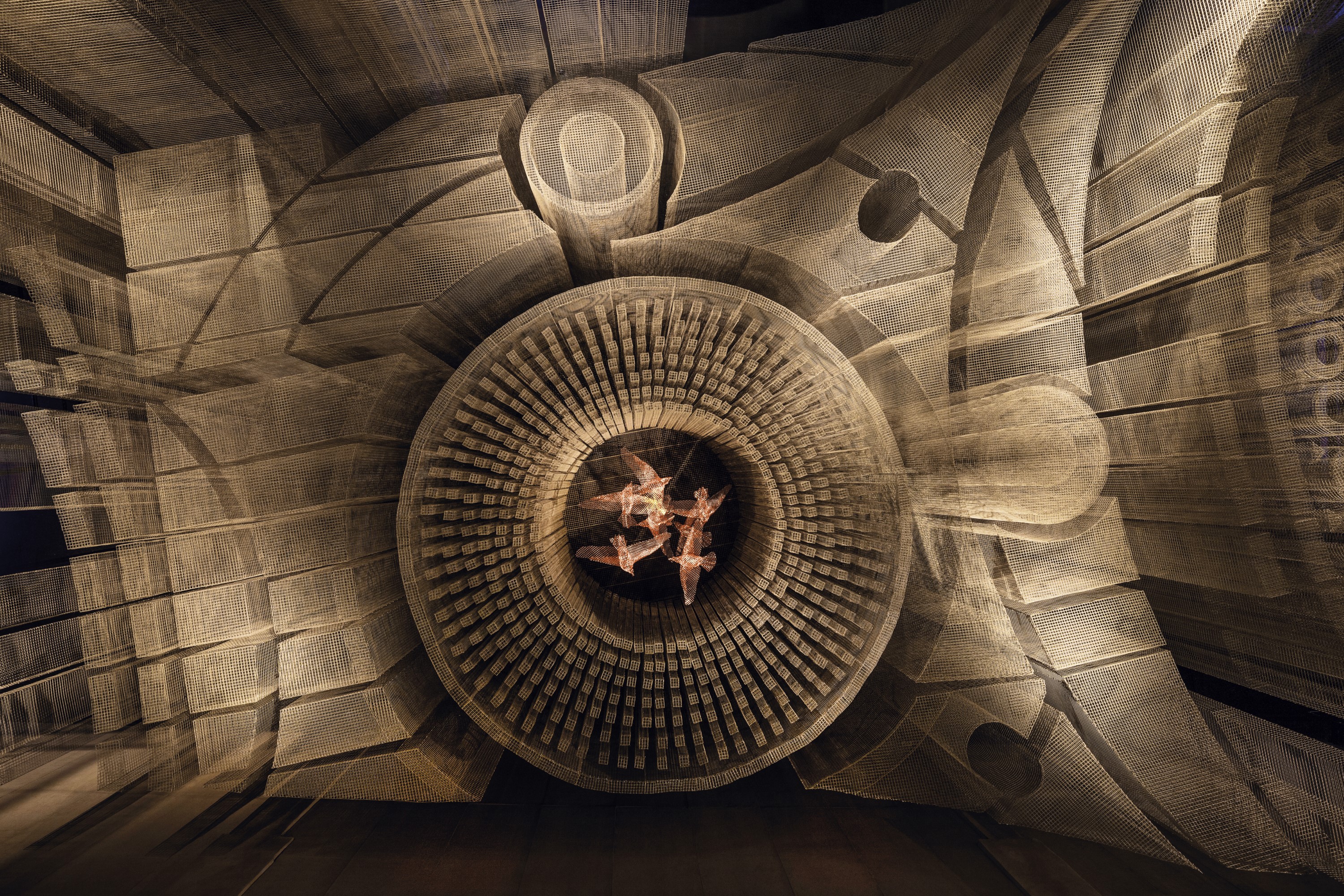

(Cover) Gharfa, STUDIO STUDIO STUDIO (Edoardo Tresoldi in collaboration with Alberonero, Max Magaldi and Matteo Foschi), 2019, temporary installation, Riyadh. Photo by Roberto Conte. (Above) Opera, Edoardo Tresoldi, 2020, permanent installation, Reggio Calabria. Photo by Roberto Conte

A dedicated function is needed to describe the internal state of the system and keep all its components synchronised. For the model to qualify as a digital twin, this function must also stay synchronised with the readings of the sensors installed on the real twin.
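
To make the idea of synchronisation concrete, here is a minimal Python sketch, assuming a hypothetical twin of a heated water tank. The class name `TankTwin`, the simple cooling model and every parameter value are invented for illustration. Between readings the twin advances its own model; when a sensor reading arrives, `sync` measures the discrepancy (the residual) and pulls the model state towards the measurement by a fixed gain. A fixed gain is only the simplest possible choice; real systems often use more rigorous estimators such as the Kalman filter.

```python
from dataclasses import dataclass, field


@dataclass
class TankTwin:
    """Hypothetical digital twin of a heated water tank (illustrative only)."""
    temperature: float           # modelled water temperature, degrees C
    ambient: float = 20.0        # assumed ambient temperature, degrees C
    loss_rate: float = 0.1       # assumed fraction of excess heat lost per time step
    gain: float = 0.5            # assumed weight given to sensor readings (0..1)
    residuals: list = field(default_factory=list)

    def step(self, heater_input: float) -> float:
        """Advance the model by one time step: heater input minus heat lost to ambient."""
        self.temperature += heater_input - self.loss_rate * (self.temperature - self.ambient)
        return self.temperature

    def sync(self, measured: float) -> float:
        """Pull the model state towards the real sensor reading and log the residual."""
        residual = measured - self.temperature
        self.residuals.append(residual)
        self.temperature += self.gain * residual
        return residual


twin = TankTwin(temperature=20.0)
print(twin.step(heater_input=5.0))  # 25.0: model prediction before the reading
print(twin.sync(measured=26.0))     # 1.0: residual between sensor and model
print(twin.temperature)             # 25.5: corrected state
```

Keeping the history of residuals is what later allows a twin to notice that the real system is drifting away from what its model expects.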

Two elements of the virtual twin are equally important: the data, whether collected by sensors or simulated, and the numerical models that emulate the behaviour of the system’s various components. The models may be based on first principles, that is, on knowledge of the governing equations that describe the behaviour of a subsystem or component (such as the law of gravity, Maxwell’s equations or the Navier-Stokes equations), whose solution predicts how the system will evolve. Alternatively, the models may be data-driven, meaning that sensor data is used to build an implicit model of behaviour by means of procedures of varying complexity. These range from simple interpolation, used to establish whether the data describes a straight line or a curve (such as a load curve, or the relationship between temperature and heat in a component), to artificial intelligence, which uses computationally very expensive procedures to faithfully reproduce the states of a subsystem in response to changes in the input data. In this case, artificial intelligence reproduces the load curve without an equation!
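
The contrast between the two kinds of model can be sketched in a few lines of Python. Here the first-principles model is the law of gravity for a falling object, d = g t²/2, and it is also used to generate synthetic, noise-free “sensor” data (an assumption made so that the result is easy to check). The data-driven side is the simplest case mentioned above, strictly speaking a least-squares fit rather than an interpolation, which tests whether the data lies on a straight line. Fitting distance against t gives a poor straight line, while fitting against t² recovers g/2 from the data alone. The helper names `fit_line`, `rms_error` and `fall_distance` are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def fall_distance(t):
    """First-principles model: distance fallen from rest after t seconds."""
    return 0.5 * G * t ** 2


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def rms_error(xs, ys, a, b):
    """Root-mean-square gap between the fitted line and the data."""
    return (sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)) ** 0.5


times = [0.0, 0.5, 1.0, 1.5, 2.0]
measured = [fall_distance(t) for t in times]  # synthetic stand-in for sensor data

# Data-driven question: is the data a straight line in t, or in t^2?
a1, b1 = fit_line(times, measured)
a2, b2 = fit_line([t ** 2 for t in times], measured)
print(round(rms_error(times, measured, a1, b1), 2))  # 2.05: not a straight line in t
print(round(a2, 3))                                  # 4.905: g/2 recovered from data
```

A fit of this kind only describes the range of conditions it was trained on, which is exactly why the first-principles view remains valuable.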

All this requires large quantities of data, a suitable computing infrastructure, specialised software and, above all, expertise in a number of areas, from the specialist in the real system (who may know nothing at all about computers and software) to the process and design engineer, the information technologist who writes the software and manages the data, the mathematician or physicist who builds the models, and the expert in computing infrastructure.

If the software behind a system’s digital twin has been well designed, the twin can even be combined with other digital twins to create a system of systems. This is one of the requirements for using the adjective “smart”: a smart factory is one in which multiple machines are modelled by digital twins, and these twins can interact to produce a super digital twin of the entire factory. The combined twin allows the factory to interact with workers through a flow of information that maximises the effectiveness of their work, which is increasingly important for safety and sustainability. For example, the digital twin might detect that a component of the system is performing worse than expected (without the need for a periodic inspection by an operator) and suggest a solution.
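
A minimal Python sketch of this “system of systems” idea, under deliberately simple assumptions: each hypothetical `MachineTwin` predicts its output as proportional to load, and a hypothetical `FactoryTwin` combines them, both to estimate total output and to flag any machine whose measured output falls more than a chosen tolerance (here an assumed 10%) below what its own twin expects. Machine names and production rates are invented.

```python
from dataclasses import dataclass


@dataclass
class MachineTwin:
    """Hypothetical twin of one machine with a deliberately simple model."""
    name: str
    nominal_rate: float  # assumed parts per hour at full load

    def expected_output(self, load: float) -> float:
        # Output assumed proportional to load (0.0 to 1.0)
        return self.nominal_rate * load


class FactoryTwin:
    """Hypothetical factory-level twin that combines the machine twins."""

    def __init__(self, machines, tolerance=0.10):
        self.machines = {m.name: m for m in machines}
        self.tolerance = tolerance  # assumed: flag machines more than 10% below expectation

    def expected_total(self, load: float) -> float:
        """Factory output predicted by summing the machine twins."""
        return sum(m.expected_output(load) for m in self.machines.values())

    def underperformers(self, load: float, measured: dict) -> list:
        """Names of machines whose measured output is clearly below their twin's prediction."""
        flagged = []
        for name, actual in measured.items():
            expected = self.machines[name].expected_output(load)
            if actual < (1 - self.tolerance) * expected:
                flagged.append(name)
        return flagged


factory = FactoryTwin([MachineTwin("press", 120.0), MachineTwin("lathe", 80.0)])
print(factory.expected_total(load=0.5))                              # 100.0
print(factory.underperformers(0.5, {"press": 59.0, "lathe": 30.0}))  # ['lathe']
```

Real machine models would be far richer; the point of the sketch is the structure, with independent twins plus a layer that combines them and compares prediction with measurement.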

Fillmore, Tresoldi Studio, 2019, permanent installation, New York. Photo by Roberto Conte

A similar situation may arise in a smart city, where a digital twin of the metro system might be combined with those of the bus system and the traffic control system to predict travel times and reduce them by sending specific suggestions to users.
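
The smart-city scenario can be sketched as a graph problem, assuming each network’s twin supplies predicted travel times (in minutes) for its own links. The stops `A` to `D`, the travel times and the helper names `combine` and `fastest_route` are hypothetical. Merging the predictions into one graph and running Dijkstra’s shortest-path algorithm gives the fastest combined route, which is the kind of suggestion that could be sent to a user.

```python
import heapq

# Hypothetical predicted travel times (minutes) supplied by separate twins
metro_twin = {("A", "B"): 6, ("B", "D"): 7}
bus_twin = {("A", "C"): 4, ("C", "D"): 12}
traffic_twin = {("B", "C"): 3}


def combine(*twins):
    """Merge per-network predictions into one directed graph: node -> [(next, minutes)]."""
    graph = {}
    for twin in twins:
        for (src, dst), minutes in twin.items():
            graph.setdefault(src, []).append((dst, minutes))
    return graph


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over the combined predictions; returns (minutes, path) or None."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, cost in graph.get(node, []):
            if nxt not in visited:
                heapq.heappush(queue, (minutes + cost, nxt, path + [nxt]))
    return None  # goal unreachable


city = combine(metro_twin, bus_twin, traffic_twin)
print(fastest_route(city, "A", "D"))  # (13, ['A', 'B', 'D'])
```

Because the twins keep updating their predictions, the same query can return a different route as conditions change, for example when congestion raises a bus link’s predicted time.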

It will be several years yet before we see large-scale adoption of these technologies, not because of technological issues but because of process requirements: companies must be ready to accept the changes, the reorganisation of work and the need for new skills, which are currently hard to find on the labour market. Early adopters of these methods will reap the benefits in terms of competitiveness and sustainability, the latter an increasingly important factor in attracting investment. Some companies are already at an advanced stage in adopting digital twins and other technologies that support the smart factory, such as the internet of things.

Several directions for the further development of this technology can already be glimpsed. Thanks to computing power and artificial intelligence, we can imagine a digital twin of a system that exists only virtually, not yet in reality, used as a design tool. We would then be able to verify how many different “versions” of the virtual system function, each simulating a different possible real system, and assess them during the design phase. This would allow us to correct mistakes in advance and optimise the functioning of the real system without building expensive physical prototypes. In short, we will have design by simulation and certification by simulation, saving both time and money. We will increasingly use this technology not only in industry but in all human activities that directly or indirectly produce large quantities of data, as in smart cities.
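
Design by simulation can be illustrated with a deliberately small example: a sweep over candidate designs of a steel cantilever beam, using the standard Euler-Bernoulli formula for tip deflection under an end load, δ = F L³ / (3 E I), with I = b h³ / 12 for a rectangular section. Every value below (load, length, width, deflection limit, candidate heights) is an assumption chosen for illustration. Each candidate height is one “version” of the design, checked in simulation, and the thinnest (and therefore lightest) one that meets the limit is kept, with no physical prototype involved.

```python
# Hypothetical design study: lightest rectangular steel cantilever that meets a deflection limit
LOAD_N = 1000.0           # assumed tip load, newtons
LENGTH_M = 1.0            # assumed beam length, metres
YOUNG_PA = 200e9          # typical Young's modulus of steel, pascals
WIDTH_M = 0.05            # assumed section width, metres
MAX_DEFLECTION_M = 0.005  # assumed design limit: 5 mm


def tip_deflection(height_m):
    """Euler-Bernoulli cantilever with an end load: delta = F L^3 / (3 E I)."""
    second_moment = WIDTH_M * height_m ** 3 / 12.0  # I for a rectangular section
    return LOAD_N * LENGTH_M ** 3 / (3.0 * YOUNG_PA * second_moment)


def lightest_valid(heights):
    """Simulate every candidate version and keep the thinnest one that passes."""
    passing = [h for h in heights if tip_deflection(h) <= MAX_DEFLECTION_M]
    return min(passing) if passing else None


candidates = [0.02, 0.03, 0.04, 0.05, 0.06, 0.08, 0.10]
for h in candidates:
    print(f"h = {h:.2f} m -> deflection {tip_deflection(h) * 1000:.1f} mm")
print("lightest valid height:", lightest_valid(candidates))  # 0.05
```

A real design twin would replace the one-line formula with full simulations, but the loop (generate versions, simulate, keep the best that satisfies the constraints) is the same.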

Moreover, this will make it possible to develop systems that are more resilient, efficient, safe and economical, and to lighten the human workload.

This is a fantastic, revolutionary technology, but we must resist the temptation to grow comfortable with the convenience of digital twins and give up the effort of studying and understanding complex phenomena and searching for the relationships between things. This is not just a theoretical matter: if we are not aware of these relationships, how can we study or assess the behaviour of our system under conditions other than those in which the data was collected? In fact, a complex phenomenon may be described by very simple relationships. Simple relationships between the parts of a system, as in Conway’s Game of Life or in spin glasses, can explain the origin of complex, chaotic behaviour. Giorgio Parisi was recently awarded the Nobel Prize in Physics for research in these areas, a recognition of the importance of understanding complex problems and describing them in computable, mathematical terms.
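
To see how simple rules can produce complex behaviour, here is a short Python sketch of one generation of Conway’s Game of Life on an unbounded grid, storing the live cells as a set of coordinates. Its only rules are that a live cell with two or three live neighbours survives and a dead cell with exactly three live neighbours comes alive, yet patterns such as the glider below travel across the grid. The function name `life_step` is hypothetical, and the sketch illustrates the Game of Life only; it does not model spin glasses.

```python
from collections import Counter


def life_step(live):
    """One generation of Conway's Game of Life; `live` is a set of (row, col) cells."""
    # Count, for every cell next to a live cell, how many live neighbours it has
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbours; survival with 2 or 3
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
# After 4 generations the glider reappears one cell down and one cell to the right
print(state == {(r + 1, c + 1) for r, c in glider})  # True
```

Nothing in the two rules mentions movement, yet the glider moves: a small example of behaviour that emerges from the relationships between the parts rather than from any single part.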
